October 11, 2020

【Press Release】Official Commercial Launch of Efficiera Ultra-Low Power AI Inference Accelerator IP Core

A New Standard for Edge AI with the World's First Hardware and Software Platform Utilizing Extremely Low Bit Quantization

LeapMind, Inc. (Location: Shibuya, Tokyo; CEO: Soichi Matsuda; hereinafter referred to as "LeapMind"), which provides solutions for companies that utilize deep learning technology, an elemental technology of AI (Artificial Intelligence), is pleased to announce that it has officially launched the commercial version of its Efficiera ultra-low power consumption AI inference accelerator IP core, which incorporates deep learning capabilities into various edge devices.

Overview of Commercial Version of Efficiera

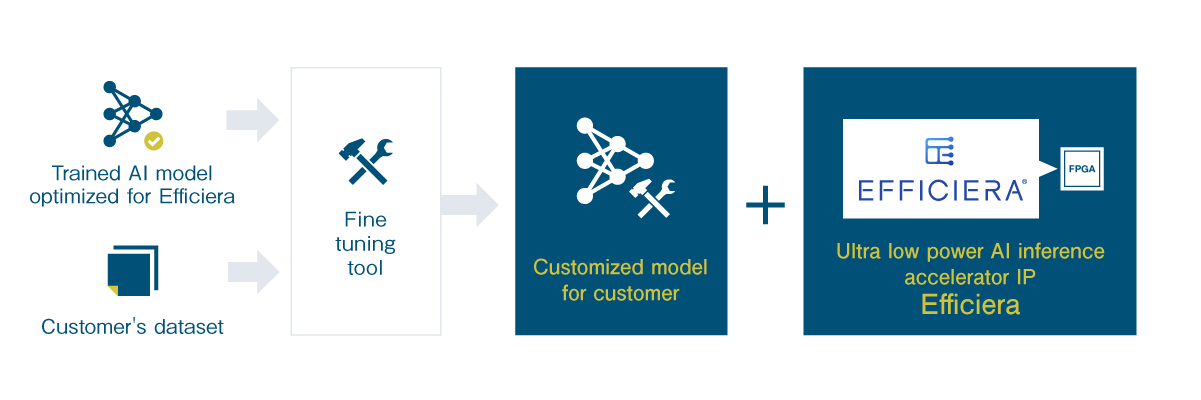

Efficiera is an ultra-low power AI inference accelerator IP core that is specialized for CNN (*1) inference operations. It allows deep learning capabilities to be incorporated into various edge devices, including construction equipment and other industrial machinery, surveillance cameras, and broadcasting equipment. Efficiera is written in RTL for use with both ASIC and FPGA, but at the time of this release it is provided as a semiconductor IP design suitable for FPGA circuits. LeapMind also provides trained object detection AI models that use extremely low bit quantization (*2) and are optimized for Efficiera. Using these models in combination with the IP core maximizes the performance of Efficiera.

Figure 1: Provided Model and How It is Used

Features of Efficiera

LeapMind has developed Efficiera based on knowledge gained from the co-creation of machine learning solutions with more than 150 companies, as well as through its research and development of technologies including extremely low bit quantization. It offers the following four features.

- Energy Efficient: By minimizing the volume of data transmitted and the number of bits, the power required for convolution operations is reduced.

- High Performance: By reducing the arithmetic logic complexity, the number of operation cycles is reduced and the arithmetic capacity per area/clock rate is improved.

- Small Footprint: By minimizing the number of operated bits, the circuit area and SRAM size per arithmetic logic unit are minimized.

- Scalable: Since the computing performance can be fine-tuned by adjusting the circuit configuration, it is possible to optimize the configuration and maximize the performance of Efficiera according to the task being performed.
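As a rough illustration of why fewer bits per value reduce memory size and data traffic, the following sketch computes the weight storage of a single convolution layer at several bit widths. The layer shape and the function name `weight_storage_bytes` are assumptions chosen for the example; they do not describe Efficiera's actual configuration or memory layout.

```python
def weight_storage_bytes(out_channels, in_channels, kernel_h, kernel_w, bits_per_weight):
    """Bytes needed to store one convolution layer's weights, densely packed."""
    num_weights = out_channels * in_channels * kernel_h * kernel_w
    total_bits = num_weights * bits_per_weight
    return (total_bits + 7) // 8  # round up to whole bytes


# Hypothetical 3x3 convolution layer with 64 input and 64 output channels
# (an assumption for illustration, not an Efficiera specification).
LAYER = dict(out_channels=64, in_channels=64, kernel_h=3, kernel_w=3)

sizes = {bits: weight_storage_bytes(bits_per_weight=bits, **LAYER) for bits in (32, 16, 8, 1)}

for bits, size in sizes.items():
    print(f"{bits:>2}-bit weights: {size} bytes")
```

For this hypothetical layer, moving from 32-bit to 1-bit weights shrinks the stored weights by a factor of 32, which also reduces the amount of data that has to be moved for each convolution.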

We also offer the following software and functions to allow you to get the most out of Efficiera.

- Optimized, Trained AI Models: We provide trained models optimized for Efficiera.

- Fine Tuning: We also provide tools for "fine tuning" – reusing part of an existing model to help train a new model for your specific needs. By leveraging the fine-tuning tool and existing models, it is possible to build new models with less data and without the trouble of creating a new model from scratch.

Benefits of Efficiera with FPGA

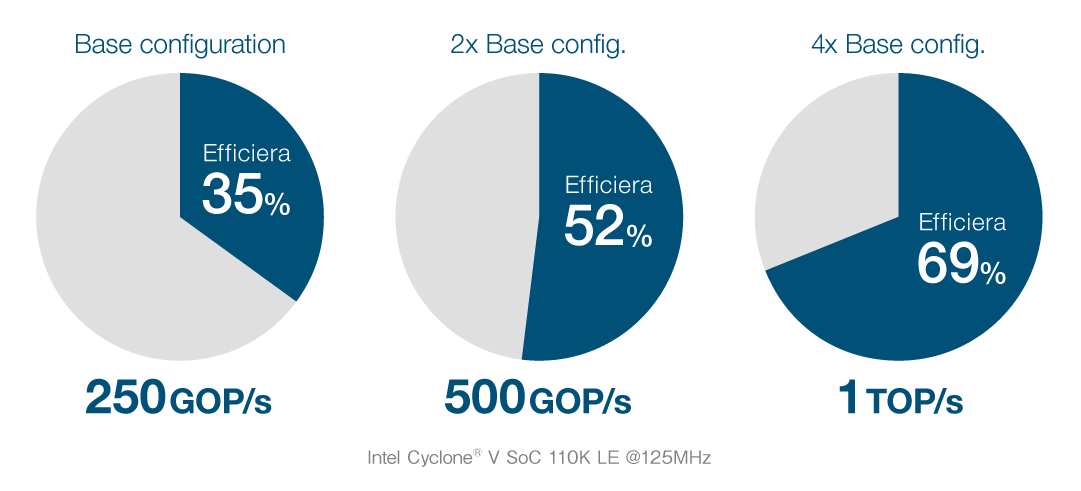

Mount on small FPGAs: The small footprint of Efficiera allows it to be mounted on small FPGAs. In the minimum configuration, it can be mounted with about 1/3 of the ALM resources of Intel's Cyclone® V SoC with 110K LEs, and the performance can be doubled or quadrupled as the resources allow. Resource usage and theoretical performance at 125 MHz are shown in Figure 2.

Figure 2: Resource Usage and Theoretical Performance

Add AI capabilities without changing the hardware design: Since the IP core itself is small, Efficiera can be integrated on the same SoC FPGA device as the CPU and image input circuit. This allows a lower BoM (Bill of Materials) for products equipped with AI capabilities. For example, by mounting Efficiera in the free space of an existing FPGA design, AI capabilities can be added without changing the number of components.

Mass-produced boards to shorten development lead-time: LeapMind is promoting the Efficiera FPGA Partner Program, and is working to make Efficiera compatible with even more mass-produced boards as part of this program. This will shorten development lead-time for mass-produced products equipped with deep learning capabilities, and enable the mass production of on-device AI solutions. The following hardware platforms are currently supported.

- KEIm-CVSoC: These SoMs, developed for mass production by Kondo Electronics Industry, are equipped with Intel® FPGAs. They are suited to a wide range of applications from technological studies to product prototyping to mass production.

- So-One Module: This is the world's smallest module equipped with an MPSoC, developed by PALTEK. The MPSoC, from Xilinx, is well-suited to edge computing.

Interview with General Manager Yamazaki on This Release

"We have made full use of our knowledge and experience to develop this commercial version of Efficiera, in order to make people's lives richer and more convenient through deep learning. We are proud that Efficiera has the potential to revolutionize various aspects of our daily lives. For example, we will enrich our lives by protecting the safety of small children, supporting secure lifestyles for the elderly, and monitoring normally inaccessible places that form our social infrastructure. In order for Efficiera to be used in such situations, our goal is for it to become an AI standard for on-device solutions smaller even than a smartphone or laptop. To achieve this standard, we have searched for and implemented solutions to a variety of issues encountered in our extensive project experience. One of these is extremely low bit quantization. We established extremely low bit quantization in anticipation of the need for IP cores that require few resources, so that they can be incorporated into small devices. There are few companies with knowledge of both embedded devices (hardware) and trained AI models (software), and that is why this has not yet been put into practical application. I am convinced that LeapMind is a rare company that can bring about practical application at an accelerated pace by approaching the issue from both sides. In the future, we will continue to improve our IP cores to provide suitable performance at ultra-low power. Regarding software, we would like to support general frameworks so that our customers can utilize them along with extremely low bit quantization. Together with FPGA suppliers, OS support, and our partners in AI development, we will further accelerate our activities to secure business and enrich our lives."

*1 CNN: Convolutional Neural Network. This type of deep learning is commonly used for image and video recognition.

*2 Extremely low bit quantization: Technology developed by LeapMind to make deep learning models more lightweight. In general, using a numerical representation with a wide bit width such as 32 or 16 bits improves the accuracy of the inference results. However, it also increases the scale (area) of the arithmetic circuit and increases the processing time and power consumption. Conversely, narrowing the bit width is known to decrease the size of the circuit and reduce both processing time and power consumption. However, accuracy also decreases, and this has been an obstacle to achieving both energy efficiency and a small footprint. LeapMind has developed extremely low bit quantization that enables extreme quantization such as 1 bit for weights (weight coefficients) and 2 bits for activations (intermediate data) while maintaining accuracy, resulting in a significant reduction in model size and faster speed, while maximizing power and area efficiency.
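To make the idea of 1-bit weights and 2-bit activations concrete, here is a minimal sketch of a generic quantization scheme: weights are reduced to their sign plus one shared scale, and activations are clipped and mapped onto four levels. This is a commonly used textbook-style approach, not LeapMind's actual method, and every function name and parameter below is an assumption made for illustration.

```python
def binarize_weights(weights):
    """1-bit weights: keep only the sign, plus one shared scale (mean |w|)."""
    scale = sum(abs(w) for w in weights) / len(weights)
    signs = [1 if w >= 0 else -1 for w in weights]
    return signs, scale


def quantize_activations(activations, bits=2, max_value=1.0):
    """Clip activations to [0, max_value] and map them onto 2**bits integer levels."""
    levels = 2 ** bits - 1  # 3 steps give 4 representable values for 2 bits
    codes = []
    for a in activations:
        clipped = min(max(a, 0.0), max_value)
        codes.append(round(clipped / max_value * levels))
    return codes, max_value / levels  # integer codes and the step size


def quantized_dot(signs, w_scale, codes, a_step):
    """Dot product on small integer codes, rescaled once at the end."""
    acc = sum(s * c for s, c in zip(signs, codes))
    return acc * w_scale * a_step


# Illustrative values only (assumptions, not data from Efficiera).
weights = [0.5, -0.25, 0.75, -0.5]
activations = [0.0, 1.0, 0.4, 2.0]

signs, w_scale = binarize_weights(weights)
codes, a_step = quantize_activations(activations)
result = quantized_dot(signs, w_scale, codes, a_step)
print(signs, codes, result)
```

In this sketch the inner loop only multiplies values in {-1, +1} by values in {0, 1, 2, 3}, which hints at why such narrow representations can be served by much smaller arithmetic circuits than 32-bit or 16-bit operations. Preserving accuracy at these bit widths is the hard part and is not addressed by this example.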

*Intel, the Intel logo, the Intel Inside logo, and other names and logos are trademarks of Intel Corporation or its subsidiaries in the United States and/or other countries.

*Product names in this text are trademarks or registered trademarks of LeapMind, Inc.

*The information in this press release is current as of the date of publication.

About LeapMind, Inc.

LeapMind, Inc. was founded in 2012 with the corporate philosophy of "bringing new devices that use machine learning to the world". Total investment in LeapMind to date has reached 4.99 billion yen. The company's strength is in extremely low bit quantization for compact deep learning solutions. It has a proven track record of achievement with over 150 companies, centered in manufacturing including the automobile industry. It is also developing its Efficiera semiconductor IP core, based on its experience in the development of both software and hardware.

Head office: Shibuya Dogenzaka Sky Building 5F, 28-1 Maruyama-cho, Shibuya-ku, Tokyo 150-0044

Representative: Soichi Matsuda, CEO

Established: December 2012

URL: https://leapmind.io/en/

Press Relations Contact Information

LeapMind, Inc. Takai, Marketing Communication Division

Tel: 03-6696-6267

E-mail: pr@leapmind.io

Inquiries about Products and Services

LeapMind, Inc. Business Development Team, Blueoil Division

Tel: 03-6696-6267

E-mail: business@leapmind.io

Official website: https://leapmind.io/products/efficiera-ip/